By Sabrina Souza, in collaboration with Carlos Garcia and Rodrigo Bassani

- Kubernetes is a container orchestration platform that is widely used to manage and scale cloud applications.

- Its use offers many advantages, but also trade-offs that must be weighed and considered by the organization before implementation.

- This article explores use cases and the reasons why Kubernetes is a powerful ally in a company’s cloud journey.

The cloud is one of the main pillars of digital transformation, allowing companies of all sizes to access powerful, scalable and flexible computing resources without having to invest in their own infrastructure. Kubernetes goes hand in hand with digital transformation in the cloud and the fundamentals behind it.

Kubernetes is a container orchestration platform widely used to manage and scale cloud applications. Therefore, the cloud and Kubernetes together are a powerful combination for business scalability and flexibility.

But how do you scale your journey to the cloud with Kubernetes? In this article, we look at some of the scenarios a company might face when deciding to migrate an application to the cloud and explore key considerations to help you plan and implement an effective strategy. Before that, let’s take a step back and get a better understanding of what Kubernetes is, what you can do with it and the trade-offs of choosing it. Finally, two cases help us understand where it applies.

Scenarios for migrating an application to the cloud

Migrating to the cloud can bring many benefits to companies, from cost savings to greater efficiency and scalability; however, assessing the business drivers behind the decision is a critical success factor. There are also scenarios that make migrating an application to the cloud unfeasible.

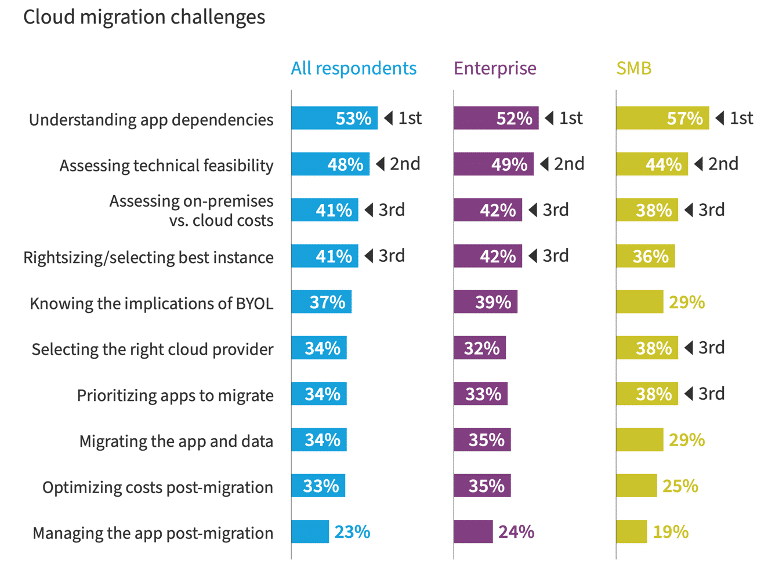

One of the main business drivers for migrating an application is cost. Migrating efficiently can be a way of reducing operational and infrastructure costs. Although not considering some non-functional requirements, hidden from the evaluation of the migration scenario, can be a trap, as the Flexera report points out. The document describes the main challenges of migration, such as understanding the application’s dependencies, technical feasibility, comparing the TCO (Total Cost of Ownership) on-premises vs cloud, among others.

In short, having a strategy is important to achieve the desired result of really reducing costs.

Source: Flexera

Another common bias is security. Some companies may be concerned about the security of their data in the cloud and reluctant to migrate for fear of a possible data leak or security breach. However, cloud service providers have strict security measures in place and often offer higher levels of security than on-premises IT systems.

Control can also be a bias. Some companies may prefer to keep their IT systems on premises to retain greater control over their data and processes. But the cloud can offer greater flexibility and scalability, allowing companies to focus on their core business rather than on managing on-premises IT systems.

Compliance bias can appear as another obstacle. Some companies may be subject to specific regulations and have compliance requirements that need to be met. Yet, many cloud service providers can help companies achieve regulatory compliance.

Finally, late adoption bias can prevent a company from joining the cloud. Some companies may be late in adopting it and not be aware of all the benefits the cloud can offer, thus missing opportunities for innovation, efficiency and cost savings.

To conclude this topic, it is worth mentioning the Cloud Adoption Framework (CAF), a complete guide to the efficient and secure adoption of cloud computing that covers technical, organizational and operational aspects. Focused on governance, skills, architecture, security, operations and cost optimization, the CAF helps companies align the cloud with their goals, promoting collaboration and driving innovation. The main cloud platforms publish their own version of the CAF, such as the AWS Cloud Adoption Framework.

What is Kubernetes and what can you do with it?

Kubernetes is an open-source platform designed to orchestrate the deployment, scaling and management of containerized applications. In other words, with it we can not only deploy an application, but also scale it horizontally (vertically too) and manage it; all using containers.

Kubernetes appeared as a solution to the demand for increased use of containers to package and distribute applications and services. It allows the team responsible (which could be DevOps engineers, software architects or even software developers) to deploy and manage containerized applications efficiently and consistently in a cluster.

In addition, Kubernetes is highly modular and flexible, enabling it to be used in a variety of deployment scenarios, from public clouds to private data centers. In the cloud, its use is largely the same regardless of the provider, which is a strong plus point, as you do not have to relearn it for each provider you use.

But what about scalability? One of the main benefits of Kubernetes is precisely its ability to scale applications quickly and efficiently, adding or removing containers according to demand. This allows applications to be scaled according to business needs, improving performance and availability.

Containers run inside objects called pods. A simple and practical way to scale your application horizontally is to increase the number of pods running your containers.
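As a minimal sketch of that idea, assuming the pods are managed by a Deployment (covered later in this article), horizontal scaling comes down to changing one number in the manifest. The manifest is modeled here as a plain Python dictionary; the name `booking-web` and the replica counts are hypothetical, and only the fields relevant to scaling are shown.

```python
import copy
import json


def scale(deployment: dict, replicas: int) -> dict:
    """Return a copy of a Deployment manifest with a new replica count."""
    if replicas < 0:
        raise ValueError("replicas must be zero or greater")
    scaled = copy.deepcopy(deployment)  # leave the original manifest untouched
    scaled["spec"]["replicas"] = replicas
    return scaled


# Hypothetical, trimmed-down Deployment: a real one also needs a selector
# and a pod template.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "booking-web"},
    "spec": {"replicas": 2},
}

scaled = scale(deployment, 5)
print(json.dumps(scaled["spec"]))
```

Against a real cluster, the same change is usually made with `kubectl scale deployment booking-web --replicas=5` or by editing the manifest and reapplying it.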

We can increase resources without too many complications, but will the traffic “know” which pod to route to? Kubernetes provides that too, through an easily configurable load balancing mechanism. As soon as you create a Service, traffic is automatically distributed among the available pods behind it. There are three commonly used Service types (ClusterIP, NodePort and LoadBalancer), and you can choose the one that best meets your application’s needs.
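The sketch below shows what such a Service definition might look like, built as a Python dictionary and printed as JSON (a format `kubectl apply -f` accepts alongside YAML). The service name, label and ports are hypothetical; the `type` field is where one of the commonly used Service types (`ClusterIP`, `NodePort` or `LoadBalancer`) is chosen.

```python
import json

# The three commonly used Service types.
SERVICE_TYPES = ("ClusterIP", "NodePort", "LoadBalancer")


def service_manifest(name, app_label, port, target_port, service_type="ClusterIP"):
    """Build a Service that forwards `port` to `target_port` on matching pods."""
    if service_type not in SERVICE_TYPES:
        raise ValueError(f"unsupported service type: {service_type}")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            # Traffic is spread across every pod carrying this label.
            "selector": {"app": app_label},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


# Hypothetical values, for illustration only.
service = service_manifest("api", "api", port=80, target_port=8080,
                           service_type="LoadBalancer")
print(json.dumps(service, indent=2))
```

Roughly speaking, `ClusterIP` exposes the pods only inside the cluster, `NodePort` opens a port on every node, and `LoadBalancer` asks the cloud provider for an external load balancer.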

How does Kubernetes work technically?

When you deploy Kubernetes, you get a cluster, which, in turn, consists of a set of worker nodes. These nodes host the pods, which are the basic unit for deploying an application.

Kubernetes has a logical part called the Control Plane that manages the nodes and pods in the cluster; this is why it is considered the brain of a Kubernetes cluster.

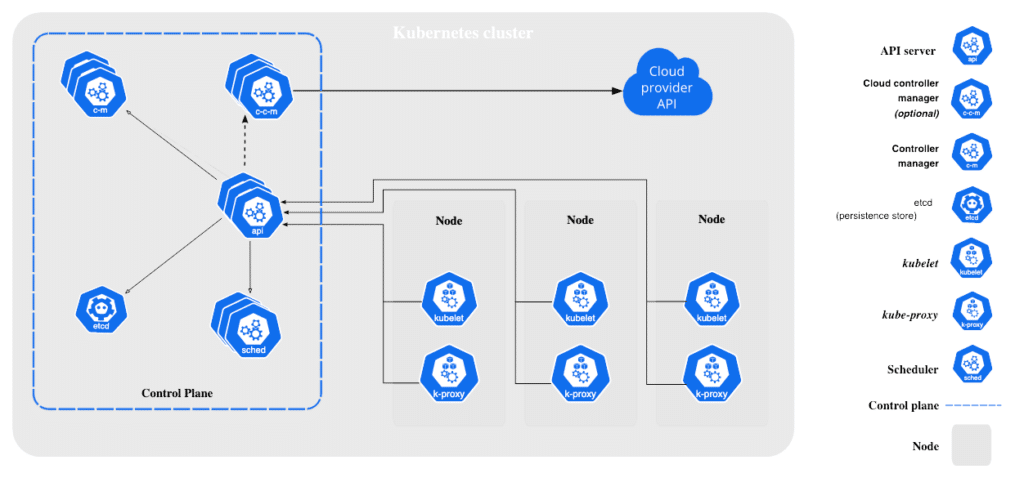

The following image shows the components of the Kubernetes architecture and how they communicate with each other:

At the heart of the Control Plane is the API server, which provides an interface for users and other Kubernetes components to interact with the system. The Control Plane also includes etcd, a distributed key-value store that holds the cluster’s state information.

Next, as you can see in the image, each node holds the kubelet, an agent that manages the containers running on the node, and the kube-proxy, which provides a network proxy for the pods deployed on the node.

The containers are deployed on the pods and there can be one or more containers on the same pod. In practice, the most common and recommended way to deploy a pod is through a deployment manifest, which specifies the pod’s characteristics, such as the container image, the exposed ports, the environment variables and the resources needed, like CPU and memory.
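To make those manifest fields concrete, here is a sketch that assembles a Deployment manifest as a Python dictionary and prints it as JSON. The application name, image reference, environment variable and resource figures are all hypothetical.

```python
import json


def deployment_manifest(name, image, port, env, requests, limits, replicas=1):
    """Build a Deployment with one container per pod."""
    labels = {"app": name}
    container = {
        "name": name,
        "image": image,                        # container image
        "ports": [{"containerPort": port}],    # exposed port
        "env": [{"name": k, "value": v} for k, v in env.items()],
        "resources": {"requests": requests, "limits": limits},  # CPU and memory
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels of the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [container]},
            },
        },
    }


# Hypothetical values, for illustration only.
manifest = deployment_manifest(
    name="assessment-api",
    image="registry.example.com/assessment-api:1.0.0",
    port=8080,
    env={"LOG_LEVEL": "info"},
    requests={"cpu": "250m", "memory": "256Mi"},
    limits={"cpu": "500m", "memory": "512Mi"},
    replicas=3,
)
print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file and submitted with `kubectl apply -f <file>`, although in practice most teams write these manifests directly in YAML.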

The manifest is then sent to the Kubernetes API Server, which handles storing the pod’s configuration and propagating the change to other components. Then, the Kubernetes component called scheduler determines the best node in the cluster to deploy the pod based on its resource needs and the available nodes.
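The scheduling decision can be pictured as two steps: filter out the nodes that cannot fit the pod, then score the rest. The toy sketch below illustrates only that idea; the scoring rule (prefer the node left with the most free CPU) is an assumption chosen for simplicity, not the actual kube-scheduler algorithm, and the node names and capacities are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


def pick_node(nodes: List[Node], cpu_request: int, memory_request: int) -> Optional[Node]:
    """Pick a node for a pod, or return None if no node can host it."""
    # Step 1: keep only the nodes with enough free CPU and memory.
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= cpu_request and n.free_memory_mib >= memory_request
    ]
    if not feasible:
        return None
    # Step 2: score the candidates (simplified rule: most CPU left after
    # placement, with remaining memory as the tie-breaker).
    return max(
        feasible,
        key=lambda n: (n.free_cpu_millicores - cpu_request,
                       n.free_memory_mib - memory_request),
    )


nodes = [
    Node("node-a", free_cpu_millicores=500, free_memory_mib=1024),
    Node("node-b", free_cpu_millicores=2000, free_memory_mib=4096),
    Node("node-c", free_cpu_millicores=1500, free_memory_mib=2048),
]

chosen = pick_node(nodes, cpu_request=1000, memory_request=1024)
print(chosen.name if chosen else "unschedulable")
```

When no node passes the filter, the pod simply stays pending until capacity becomes available.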

The kubelet component on each node in the cluster is responsible for downloading the container image from the container registry, creating and starting the containers and ensuring that they are running and communicating correctly with other containers in the same pod and with services outside.

Kubernetes offers advanced features for managing pods, chiefly Deployments and Services. A Deployment has several fundamental roles: it defines and maintains the number of pod replicas running, watches for changes in the specification and updates all replicas when changes occur, and provides options for rolling updates, rollbacks, fault management and much more. A Service, on the other hand, is an abstraction layer that groups a set of pods and allows them to be exposed to traffic, including external traffic. It provides load balancing and a stable IP address, and its behavior can be changed according to the type defined and the needs of the solution.
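A Service finds its pods through labels: every pod whose labels contain all the key/value pairs in the Service selector becomes a backend. The sketch below mimics that matching in plain Python with hypothetical pod names. The round-robin rotation is only an illustration of load balancing; the real distribution depends on how kube-proxy is configured.

```python
from itertools import cycle


def select_pods(selector, pods):
    """Return the names of pods whose labels contain every selector pair."""
    return [
        pod["name"]
        for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


# Hypothetical pods, for illustration only.
pods = [
    {"name": "ui-1", "labels": {"app": "ui"}},
    {"name": "api-1", "labels": {"app": "api"}},
    {"name": "api-2", "labels": {"app": "api"}},
]

backends = select_pods({"app": "api"}, pods)

# Spread four incoming requests across the matching pods in turn.
rotation = cycle(backends)
routed = [next(rotation) for _ in range(4)]

print(backends)
print(routed)
```

Because matching is done on labels rather than on pod names, replicas added or replaced by a Deployment are picked up by the Service without any change to its definition.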

What are the trade-offs when choosing Kubernetes?

When choosing Kubernetes as a platform for managing and orchestrating containerized applications, you need to consider the trade-offs involved. As we have seen, Kubernetes is a powerful, highly scalable and flexible platform, but it is also complex and requires advanced technical skills to manage it.

For this reason, one of the most significant trade-offs of Kubernetes is its complexity. Learning to use it correctly can take time and effort. Managing a Kubernetes cluster requires expertise in programming, system administration and network infrastructure. In addition, adopting Kubernetes can entail more costs, such as the need for dedicated servers and storage.

On the other hand, Kubernetes is highly scalable and can handle heavy workloads. But to take advantage of Kubernetes’ full scalability potential, proper cluster configuration and optimization of resources for efficient use are needed.

Security is also an important trade-off when using Kubernetes. Although it is a secure platform by default, it needs to be configured correctly to ensure that the infrastructure is secure. The proper configuration of networks, authentication and authorization is essential, as is the implementation of security best practices at all levels of the technology stack.
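On the authorization side, one common building block is role-based access control (RBAC). As a hedged example, the sketch below builds a namespaced Role that only allows reading pods and their logs. The role name and namespace are hypothetical, and a RoleBinding (not shown) would still be needed to grant the role to a user or service account.

```python
import json


def read_only_pod_role(name, namespace):
    """Build an RBAC Role that can read pods and pod logs, and nothing else."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": [
            {
                "apiGroups": [""],  # "" is the core API group, where pods live
                "resources": ["pods", "pods/log"],
                "verbs": ["get", "list", "watch"],  # no create, update or delete
            }
        ],
    }


# Hypothetical names, for illustration only.
role = read_only_pod_role("pod-reader", "assessment")
print(json.dumps(role, indent=2))
```

Granting only the verbs a team actually needs is one way to apply the principle of least privilege inside the cluster.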

If flexibility is a requirement for your application’s architecture, it is important to factor it into the trade-offs. Kubernetes is highly flexible and can be configured to meet a wide variety of use cases. However, configuration can be complicated and requires advanced technical knowledge.

Finally, maintenance is another trade-off to consider. Kubernetes demands constant maintenance to ensure that the cluster is working properly and up to date with the latest versions. This includes applying security updates, troubleshooting and resolving network and infrastructure problems. As a counterpoint, there are managed Kubernetes services offered by some platforms that make this maintenance significantly easier. One example is AWS’s Elastic Kubernetes Service (EKS), which provides an environment for deploying, managing and scaling containerized applications using Kubernetes on the AWS infrastructure.

To use or not to use Kubernetes?

Scenario 1

The company has meeting rooms and wants to build a simple, one-off web application that allows its employees to book them when necessary. It does not expect many simultaneous accesses, nor does it expect to expand the application or to need horizontal scaling or load balancing. One detail: the company already has a database and persistence will be done on it; in other words, we do not need to provide or manage the database either.

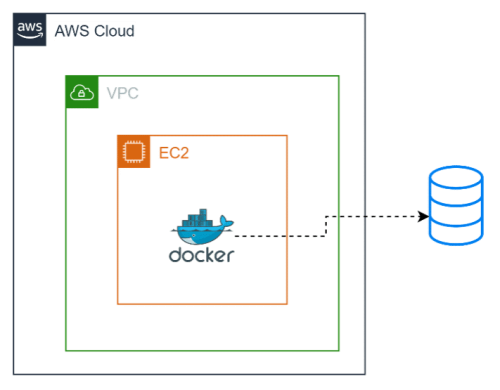

Therefore, the solution is simply a web application, which in turn has been packaged in a Docker container, leaving the architecture for this project as follows:

- A hosting server to run the application; and

- A Docker container for the blog’s web application.

Assuming that the solution would be on the AWS platform, one way of representing it in a diagram would be:

Analyzing this simple architecture, would it be necessary to use Kubernetes? The answer is no. Its use would bring unnecessary complexity, expense and a longer implementation time. As we basically only have one element, which is a Docker container, it can be easily configured on a machine such as an EC2 instance. And if more capacity is needed, vertical scaling would be enough: simply increase the capacity of the hosting server. All this without adding the extra complexity of Kubernetes.

Scenario 2

A company with around 1,000 employees has a very specific and customized performance appraisal process. Because of this, it decided to develop a technological solution that would meet all their needs. The result was the following elements:

- Authentication/authorization microservice.

- Microservice for registering stages and forms.

- Microservice for the written assessment stages.

- Microservice for integrating the results with the company’s ERP (integrated business management system) and other HR systems.

- Front-end web application for accessing the features of the assessment process.

- PostgreSQL database.

Each of these elements is packaged as a Docker image. The complete solution needs to be available all year round, as human resources employees need access to make all the necessary preparations for the performance appraisal. However, twice a year, during the evaluation period, the number of accesses rises sharply. The company takes the high availability of the application very seriously.

Given this scenario, would it be right to use Kubernetes to provide and manage the complete solution from this case? The answer is yes!

The solution includes several containers and needs load balancing, high availability, horizontal scaling at certain times and fault management. These are all requirements that would be difficult to meet without Kubernetes and, as we have seen, Kubernetes meets all of them with the right configuration.

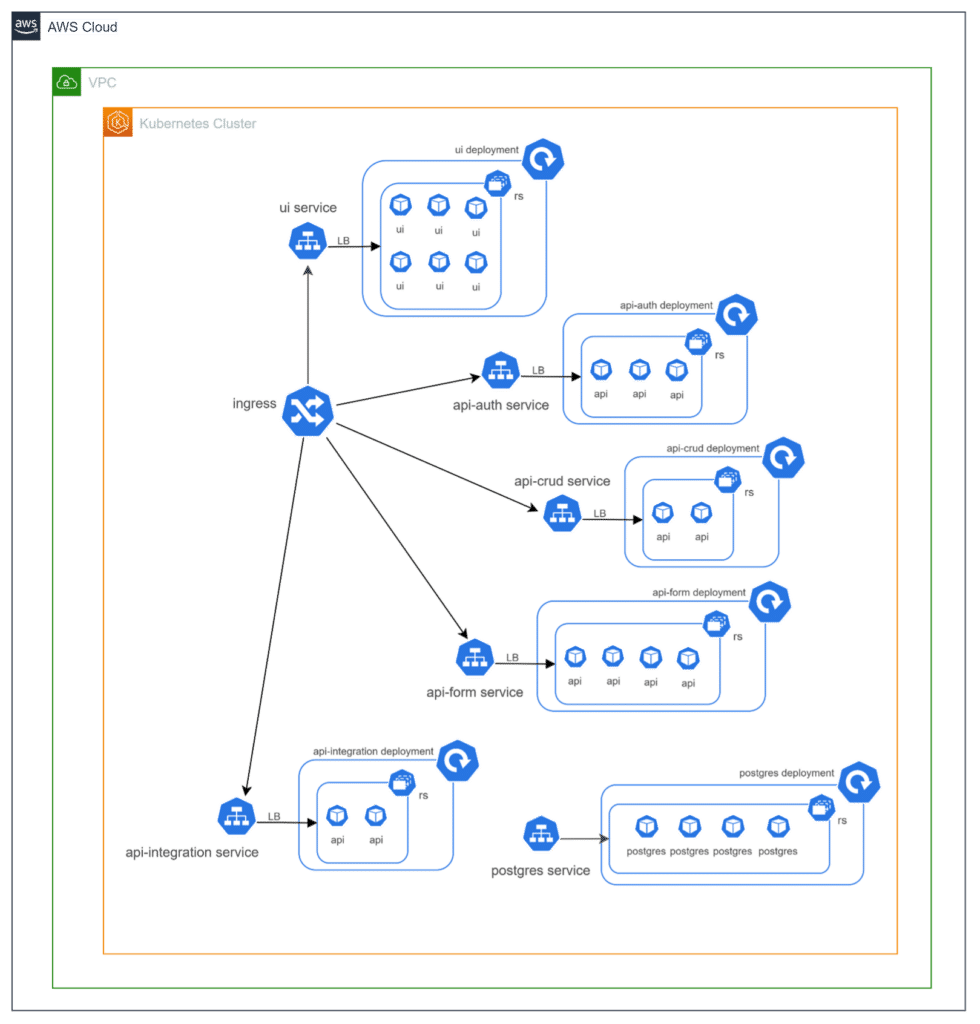

Assuming that the solution would be on the AWS platform, one way of representing it in a diagram would be:

At this point, we can do some explaining:

- The diagram could have been drawn in many different ways, but we chose to present a Kubernetes Service view, representing the number of pod replicas that each layer could have;

- Each layer was abstracted by a service that performs load balancing (LB) between the pods (represented by “ui”, “api” and “postgres”);

- The ReplicaSet (rs) guarantees the number of pod replicas defined in the manifest;

- The deployment ensures that the replicas are managed; and

- With the architectural solution modularized and distributed in this way, we guarantee all the requirements, as well as various other benefits such as easy access to logs and configurations, maintenance via code (manifests), ease of deploying new versions, and much more!

How to scale your journey to the cloud with Kubernetes?

Now that we know a little more about what Kubernetes is, what you can do with it, its features and functionalities, we can talk about how you could conceive of a journey to the cloud with Kubernetes. This journey could be followed in nine objective and well-defined steps that we dissect in the topics below.

Study and understand the tool

To use Kubernetes effectively when scaling to the cloud, it is essential to carry out a thorough study of the tool. You need to understand basic concepts, architecture, commands and tools, and good practices and standards to guarantee the security and efficiency of your applications. This will enable you to use Kubernetes effectively, guaranteeing the efficient and scalable execution of applications in the cloud.

Planning

Before you start using Kubernetes, you need to plan how to use it in the most efficient way to meet your needs. Consider factors such as the amount of traffic you expect to receive, the processing capacity required to run your applications and which Kubernetes features are best suited to your needs.
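A back-of-the-envelope calculation can help at this stage. The sketch below estimates how many pod replicas a service might need from expected peak traffic; every number in it (requests per second, per-pod throughput, headroom, minimum replicas) is a hypothetical planning input that would have to come from your own load tests and requirements.

```python
import math


def replicas_needed(peak_rps, rps_per_pod, headroom=0.25, min_replicas=2):
    """Estimate the replica count for a peak load, with a safety margin.

    headroom=0.25 adds 25% on top of the expected peak; min_replicas keeps
    at least two pods running so that one failure does not cause an outage.
    """
    if rps_per_pod <= 0:
        raise ValueError("rps_per_pod must be positive")
    raw = peak_rps * (1 + headroom) / rps_per_pod
    return max(min_replicas, math.ceil(raw))


# Hypothetical planning inputs.
evaluation_period = replicas_needed(peak_rps=1200, rps_per_pod=150)
rest_of_year = replicas_needed(peak_rps=60, rps_per_pod=150)

print(evaluation_period, rest_of_year)
```

An estimate like this gives a starting point for sizing the cluster; it does not replace measuring the application under realistic load.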

Choose a cloud platform

As mentioned in the earlier section, Kubernetes can run on various cloud platforms, including Amazon Web Services, Google Cloud Platform and Microsoft Azure. At this stage, you will need to choose a platform that meets your needs and offers support for Kubernetes.

Creating a Kubernetes Cluster

A Kubernetes cluster consists of a set of virtual (or physical) machines that run Kubernetes and can be used to run your applications. In this step you will need to create a Kubernetes cluster on your chosen cloud platform.

Deploying containers

Kubernetes is a container orchestration platform, so you will need to create your containers and then deploy them on your Kubernetes cluster. There are several tools available to help you create and manage containers, including Docker.

Traffic management

In the previous section, we mentioned some of the load balancing benefits that Kubernetes’ Service feature offers. But at this stage you will need to go further, because traffic management is one of the most important aspects of an application. Bear in mind that Kubernetes offers features such as Services and Ingresses to expose applications inside and outside the cluster and to balance load across them, as well as advanced traffic routing techniques such as canary deployments. Efficient traffic management ensures that applications are available and working reliably, even under high demand, and helps to reduce infrastructure costs.
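As an illustration of an Ingress, the sketch below builds a manifest that routes requests for one host to two different Services by URL path. The host name, service names and ports are hypothetical, and the manifest only takes effect if an Ingress controller is installed in the cluster.

```python
import json


def ingress_manifest(name, host, routes):
    """Build an Ingress; `routes` maps a URL path prefix to (service, port)."""
    paths = [
        {
            "path": path,
            "pathType": "Prefix",
            "backend": {"service": {"name": service, "port": {"number": port}}},
        }
        for path, (service, port) in routes.items()
    ]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {"rules": [{"host": host, "http": {"paths": paths}}]},
    }


# Hypothetical host and services, for illustration only.
ingress = ingress_manifest(
    "assessment",
    "assessment.example.com",
    {"/api": ("api", 8080), "/": ("ui", 80)},
)
print(json.dumps(ingress, indent=2))
```

Here the Ingress decides which Service receives a request, and each Service in turn balances the load across its own pods.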

Monitoring and logging

Monitoring and logging are important steps in application management. Tools such as Prometheus, Grafana and Elasticsearch, all of which integrate with Kubernetes, allow you to collect and visualize important metrics and logs from different pods and containers in one place. By closely monitoring application performance and logs, you can identify problems quickly, minimize downtime and spot opportunities for optimizing infrastructure and applications.

Automation

Automation is a fundamental step in scaling to the cloud with Kubernetes, as it allows you to automate the management of infrastructure and applications. The Kubernetes ecosystem offers tools such as Helm and Operators to create reusable application packages and automate the management of complex applications, respectively. In addition, Kubernetes provides automation features such as the automatic rollout of updates and automatic scaling based on application demand, helping to ensure the continuous availability of applications and the efficient scalability of the infrastructure. Automation can also reduce costs and improve operational efficiency, freeing up resources for other important tasks.
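Automatic scaling based on demand is typically handled by the Horizontal Pod Autoscaler, whose documentation describes the core calculation as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The sketch below is a simplified version of that rule, clamped between a minimum and a maximum. The metric values and bounds are hypothetical, and details such as the tolerance band and stabilization windows are left out.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas, max_replicas):
    """Simplified Horizontal Pod Autoscaler rule, clamped to [min, max]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical readings: average CPU utilization in percent, target of 60%.
scale_up = desired_replicas(4, current_metric=90, target_metric=60,
                            min_replicas=2, max_replicas=10)
scale_down = desired_replicas(4, current_metric=30, target_metric=60,
                              min_replicas=2, max_replicas=10)
capped = desired_replicas(8, current_metric=120, target_metric=60,
                          min_replicas=2, max_replicas=10)

print(scale_up, scale_down, capped)
```

The upper bound matters for cost control: without it, a traffic spike or a faulty metric could keep adding pods until the cluster runs out of capacity.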

Training

Training can be the closing stage of this journey, as the technology is complex and requires specific technical knowledge to deploy and manage applications. The team should have a good understanding of basic and advanced Kubernetes concepts, as well as monitoring and logging tools, and Kubernetes certifications can help assess the team’s technical expertise. Constant training is essential to keep the team up to date with changes in technology and best practices for security and operational efficiency.

Conclusion: the importance of a structured approach

In conclusion, scaling to the cloud with Kubernetes can offer companies many benefits, such as scalability, agility, operational efficiency, modernity and architectural elegance. To ensure success on this journey, it is important to follow a structured approach, which includes studying and understanding the tool, choosing the cloud platform, traffic management, monitoring and logging, automation and constant team training.

With the right approach, companies can make the most of the benefits of Kubernetes and overcome the challenges the technology presents, like the complexity and the steep learning curve. Kubernetes is a technology that encompasses a universe of resources and is constantly evolving. The journey to the cloud with it can be a continuous quest for operational excellence and efficiency. With the team’s commitment to continuously learning and improving their Kubernetes skills, the journey can lead to a successful digital transformation of the company.

SABRINA SOUZA works as a Developer at EloGroup.

CARLOS GARCIA works as a System Architect at EloGroup.

RODRIGO BASSANI works as CTO and Partner at EloGroup.